Who should die – moral maze for motors

Whose lives are most valuable? This is the question being discussed by developers of self-driving cars.

These cars are expected to become commonplace within a few years, but their release is being delayed partly because of the ethical “decisions” they need to be programmed to make.

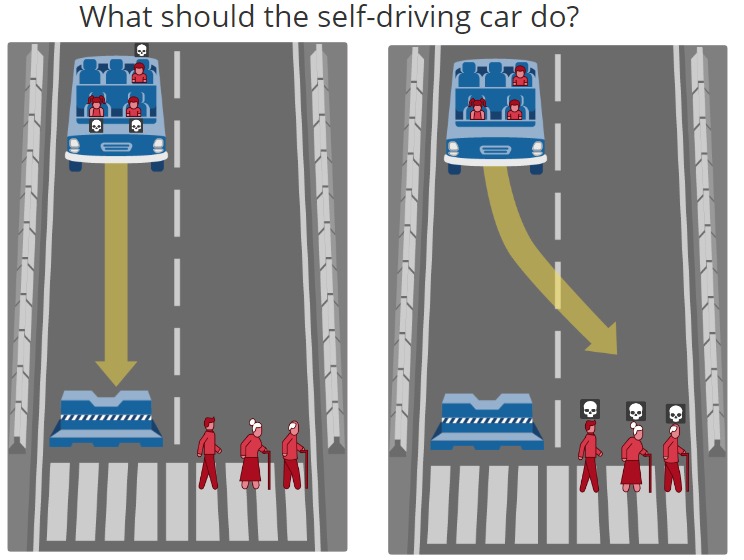

The moral dilemma is this: what should the car do if, in a split second, it is faced with an unavoidable accident in which someone or something has to die? Who, or what, should it kill?

For example, if a self-driving car full of people is travelling autonomously at 70mph on the motorway and an animal steps out, the car has to make an instant, pre-programmed moral judgement: hit and kill the animal, or swerve to avoid it and risk crashing, potentially killing some of the passengers. Should the car’s decision differ depending on the age of the people in the car or the type of animal?

Researchers at MIT have spent the last few years gauging the public’s view on this by asking people to complete a quiz of moral driving decisions on their website, Moral Machine. So far the results have differed by country: some respondents preferred to save pedestrians over animals or over passengers, while others chose the reverse.

When we start choosing between humans, it gets a whole lot more complicated. The website includes scenarios asking what the car should do if a group of people stepped out. The car has to decide whose life is most valuable to protect.

What about the moral behaviour of the people who might be killed (for example, whether they caused the accident), or their age? Should the car take these into account?

These considerations create a big moral dilemma for the developers. Is it possible to program a self-driving car with morals that the whole world can accept?

Will different manufacturers hold conflicting views based on their local cultural values, so that cars behave differently in each country, or will all cars share the same ethics worldwide?

How will the world go about finding and agreeing on an answer? Perhaps through focus groups or voting, but who is qualified to take part, and how can such a group construct and agree on a view of what is morally right and wrong?

Playing God

The Bible tells us that every human life matters and that only God can decide what is morally right. He sets the standards and He is the ultimate authority, but He is also the perfect judge. Unlike us, He knows all the facts, all the context and all the options. Trying to program a morally responsible robot makes us recognise that we are not God.

Romans 3:23 reminds us that we are morally flawed, so we cannot aspire to be God. This flaw taints all our work: although these cars are amazing, they will ultimately be imperfect because they are made by imperfect people, and this will be reflected in the moral decisions they make.

The significance of this moral dilemma for our future is that these driving computers will ultimately make decisions about the value of our lives. The developers have to choose who lives or dies; they need to make a judgement. However, the Bible tells us (in 2 Corinthians 5:10) that the only judgement that really matters is God’s. It says that we will all be judged by His Son, Jesus Christ, who will decide our eternal future.

The self-driving car’s judgement will rest on decisions programmed by humans: imperfect decisions based on limited human knowledge. God, by contrast, knows everything we have ever done, every wrong decision and all our motives.

That is why we are so grateful that Jesus, who lived a perfect life and made perfect decisions, stands in our place before God, so that when we meet our Maker He sees Jesus’ life rather than our imperfect, morally flawed one, and we can be set free.

Written by Tom Warburton